Synheart Binary Emotion Classification Models (ONNX)

ONNX models for on-device binary emotion classification from HRV features.

This repository contains pre-trained ONNX models for inferring momentary affective states (Baseline vs Stress) from Heart Rate Variability (HRV) features. These models are part of the Synheart Emotion SDK ecosystem, enabling privacy-first, real-time emotion inference directly on device.

Made by Synheart AI - Technology with a heartbeat.

Model Performance

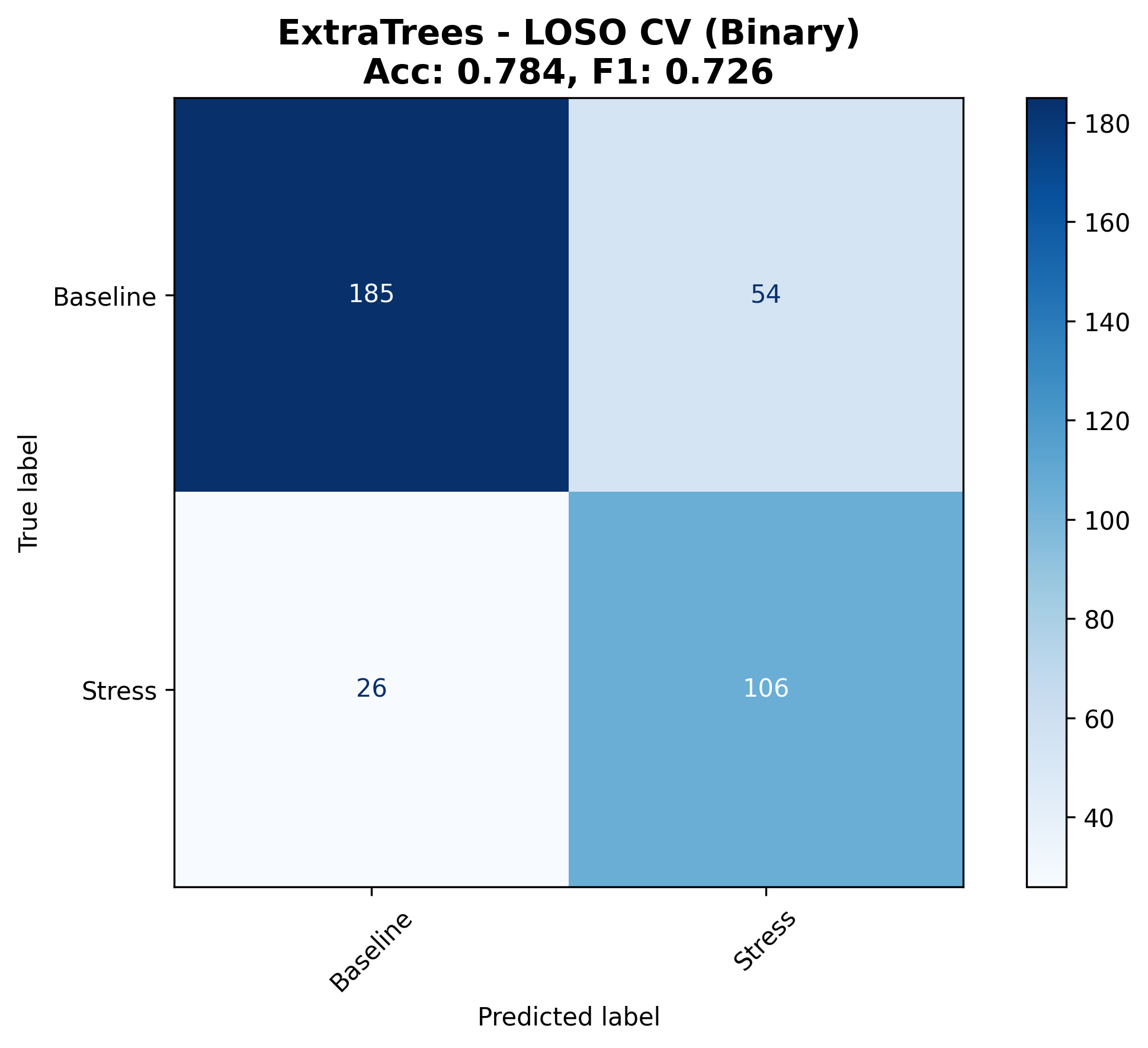

ExtraTrees 120_60 Model (trained on WESAD dataset):

- Accuracy: 78.44%

- F1-Score: 72.60%

These models are research-based and trained on the WESAD dataset with Leave-One-Subject-Out (LOSO) cross-validation. The models output probabilistic class scores representing state tendencies, not ground-truth emotional labels.
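For readers unfamiliar with Leave-One-Subject-Out evaluation, the following is a minimal sketch of how such folds can be built: every window from one subject is held out for testing while the remaining subjects form the training set. The function name `loso_splits` and the subject IDs are illustrative assumptions, not the training code used for these models.

```python
from collections import defaultdict


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids holds one subject label per feature window.
    """
    by_subject = defaultdict(list)
    for idx, subject in enumerate(subject_ids):
        by_subject[subject].append(idx)

    for held_out in sorted(by_subject):
        test_idx = by_subject[held_out]
        # Train on every window that does not belong to the held-out subject
        train_idx = sorted(
            i for subject, idxs in by_subject.items()
            if subject != held_out
            for i in idxs
        )
        yield held_out, train_idx, test_idx


# Hypothetical subject labels, one per window
subjects = ["S2", "S2", "S3", "S3", "S3", "S4"]
for held_out, train_idx, test_idx in loso_splits(subjects):
    print(held_out, train_idx, test_idx)
```

Because no subject appears in both sets of a fold, the reported scores reflect generalization to unseen people rather than to unseen windows from people already in the training data.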

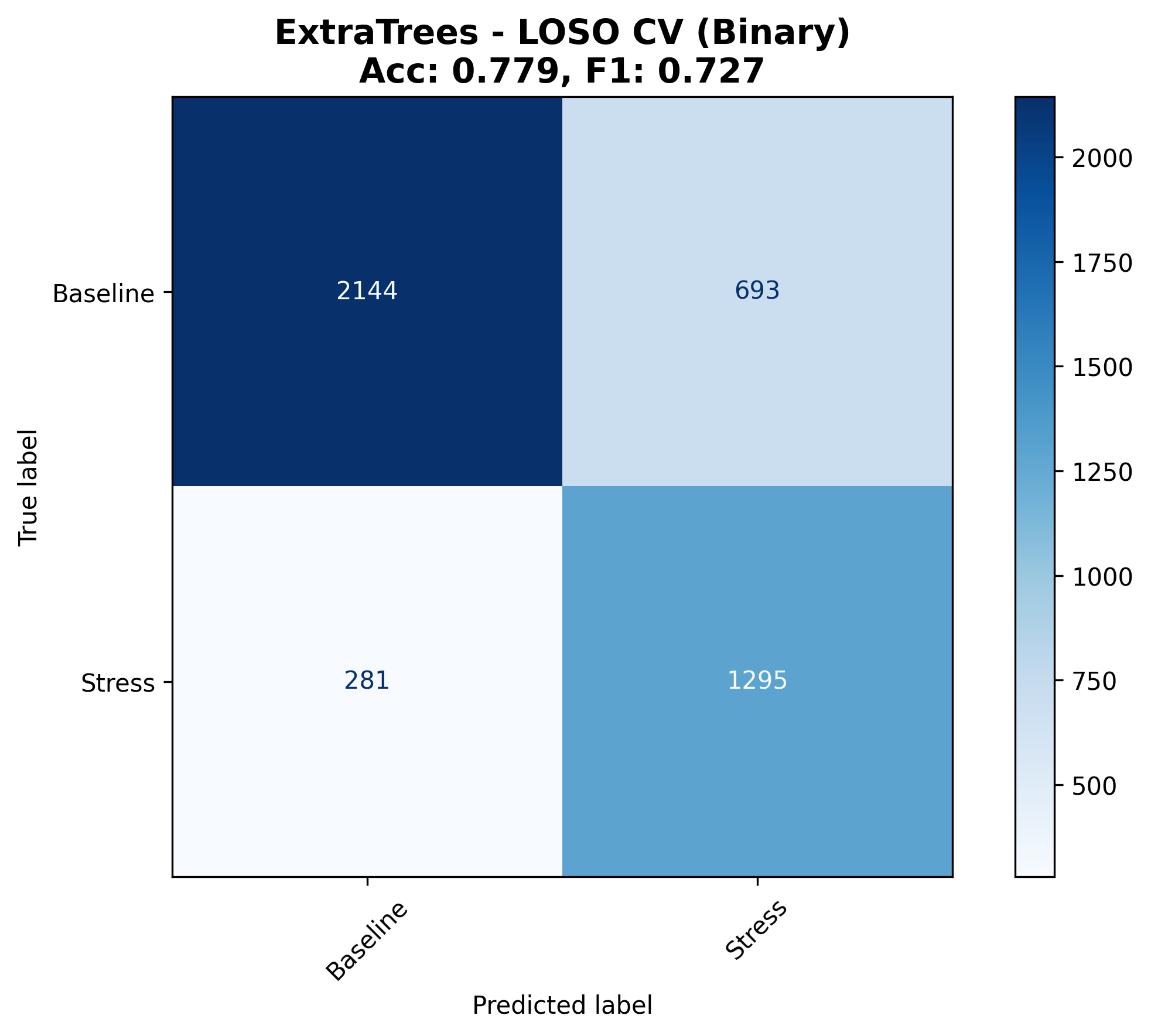

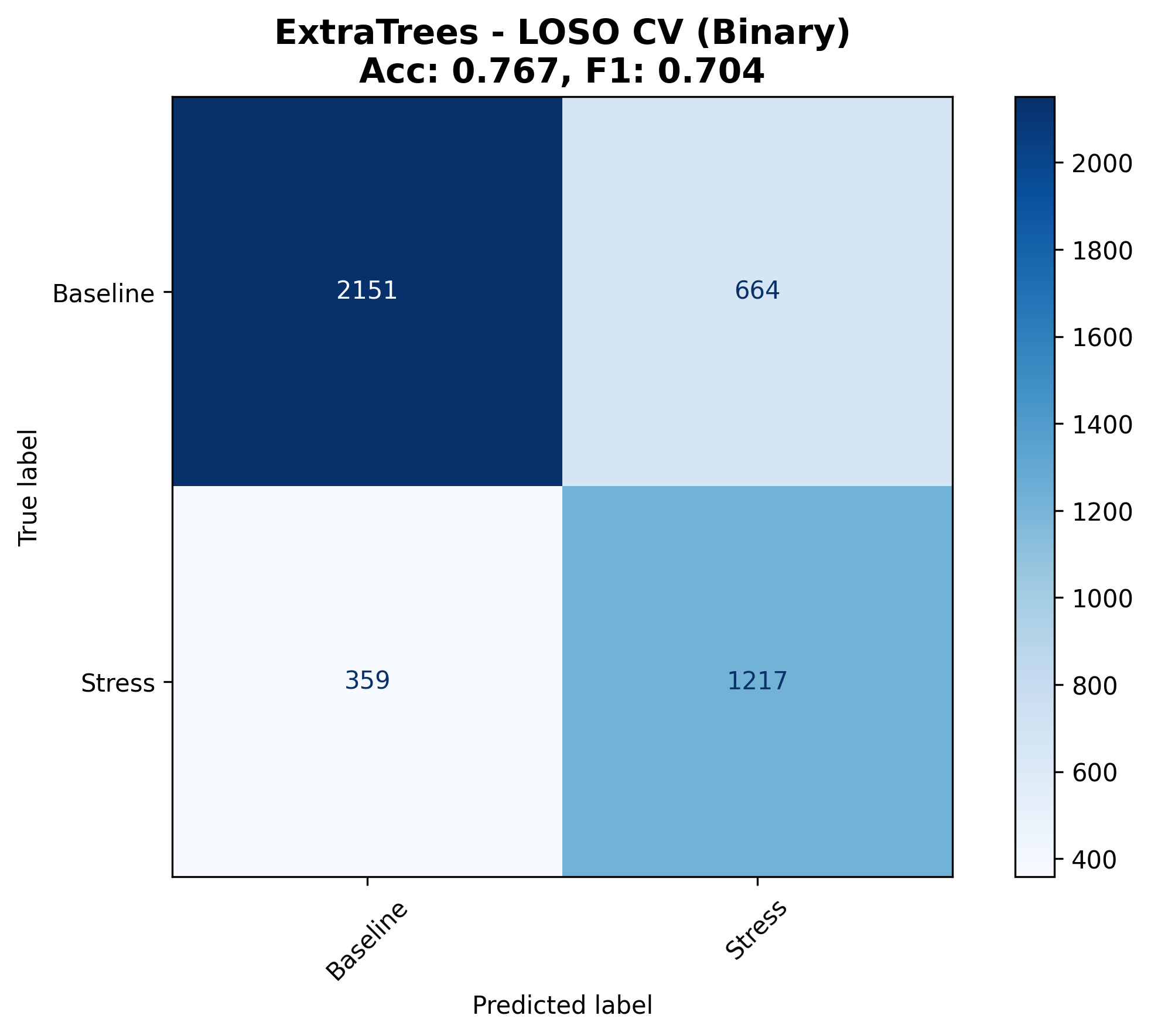

Confusion Matrices

Performance evaluation results (LOSO cross-validation) for each model configuration:

w120s60_binary (Window: 120s, Step: 60s) - Default Model

w120s5_binary (Window: 120s, Step: 5s)

w60s5_binary (Window: 60s, Step: 5s)
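The window and step values in the configuration names describe how a recording is sliced before features are computed. As a rough illustration, assuming windows start at offset 0 and only full-length windows are kept (an assumption, not something this repository specifies), the boundaries can be enumerated like this. The helper name `window_bounds` is hypothetical.

```python
def window_bounds(duration_s, window_s=120, step_s=60):
    """Return (start, end) offsets in seconds for every full window."""
    if window_s <= 0 or step_s <= 0:
        raise ValueError("window_s and step_s must be positive")
    bounds = []
    start = 0
    # Stop as soon as a window would run past the end of the recording
    while start + window_s <= duration_s:
        bounds.append((start, start + window_s))
        start += step_s
    return bounds


# A hypothetical 5-minute (300 s) recording under each configuration
print(len(window_bounds(300, window_s=120, step_s=60)))  # w120s60_binary
print(len(window_bounds(300, window_s=120, step_s=5)))   # w120s5_binary
print(len(window_bounds(300, window_s=60, step_s=5)))    # w60s5_binary
```

Consecutive windows overlap by the window length minus the step, so the 5-second step configurations produce far more (and far more correlated) windows per recording than the default 60-second step.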

Installation

pip install onnxruntime numpy

Quick Start

import json
from pathlib import Path

import numpy as np
import onnxruntime as ort


class WesadInferenceEngine:
    def __init__(self, model_path, metadata_path=None):
        # Initialize ONNX Runtime session
        self.session = ort.InferenceSession(str(model_path))
        self.input_name = self.session.get_inputs()[0].name

        # Default labels
        self.label_order = ["Baseline", "Stress"]

        # Load metadata if provided; otherwise keep an empty dict
        self.metadata = {}
        if metadata_path and Path(metadata_path).exists():
            with open(metadata_path, "r") as f:
                self.metadata = json.load(f)
            print(f"Model Loaded: {self.metadata.get('model_id', 'Unknown')}")

    def predict(self, hrv_features):
        """
        Predict emotion class from HRV features.

        Args:
            hrv_features: list or np.array of 14 features in order:
                ['RMSSD', 'Mean_RR', 'HRV_SDNN', 'pNN50', 'HRV_HF', 'HRV_LF',
                 'HRV_HF_nu', 'HRV_LF_nu', 'HRV_LFHF', 'HRV_TP', 'HRV_SD1SD2',
                 'HRV_Sampen', 'HRV_DFA_alpha1', 'HR']

        Returns:
            tuple: (predicted_label, confidence_score)
        """
        # Shape the features as a single-row float32 batch
        input_data = np.array(hrv_features, dtype=np.float32).reshape(1, -1)
        outputs = self.session.run(None, {self.input_name: input_data})

        # First output: predicted class index; second output: class probabilities
        predicted_idx = int(outputs[0][0])
        probabilities = outputs[1][0]

        label_name = self.label_order[predicted_idx]
        confidence = probabilities[predicted_idx]
        return label_name, confidence


# Usage Example
if __name__ == "__main__":
    MODEL_FILE = "w120s60_binary/models/ExtraTrees.onnx"
    METADATA_FILE = "w120s60_binary/models/ExtraTrees_metadata.json"

    # Example HRV features (14 features in correct order)
    example_input = [35.5, 950.2, 55.1, 15.2, 430.0, 620.0,
                     0.42, 0.78, 1.45, 1450.0, 0.72, 1.3, 1.1, 91.0]

    engine = WesadInferenceEngine(MODEL_FILE, METADATA_FILE)
    label, confidence = engine.predict(example_input)

    print(f"Predicted Label: {label}")
    print(f"Confidence: {confidence:.2%}")
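Since the models emit class probabilities rather than ground-truth labels, an application may prefer not to act on predictions that are close to 50/50. The sketch below shows one possible way to do that. The `interpret` helper, the "Uncertain" fallback label, and the 0.6 threshold are all illustrative assumptions and are not part of the models or the Synheart SDKs.

```python
def interpret(probabilities, labels=("Baseline", "Stress"), min_confidence=0.6):
    """Map per-class probabilities to (label, confidence).

    probabilities must be in the same order as labels. Predictions whose
    top probability is below min_confidence are reported as "Uncertain"
    (a hypothetical fallback, not a model output).
    """
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per label")
    # Index of the most probable class
    idx = max(range(len(labels)), key=lambda i: probabilities[i])
    confidence = float(probabilities[idx])
    label = labels[idx] if confidence >= min_confidence else "Uncertain"
    return label, confidence


print(interpret([0.18, 0.82]))  # ('Stress', 0.82)
print(interpret([0.55, 0.45]))  # ('Uncertain', 0.55)
```

A suitable threshold depends on the application and would need to be chosen from validation data.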

Repository Structure

├── w120s60_binary/          # Window: 120s, Step: 60s
│   ├── models/
│   │   ├── ExtraTrees.onnx
│   │   └── ExtraTrees_metadata.json
│   └── figures/
│       └── confusion_matrix_ExtraTrees_loso_binary.png
├── w120s5_binary/           # Window: 120s, Step: 5s
│   ├── models/
│   │   ├── ExtraTrees.onnx
│   │   ├── LogReg.onnx
│   │   ├── RF.onnx
│   │   └── [metadata files]
│   └── figures/
│       ├── confusion_matrix_ExtraTrees_loso_binary.png
│       ├── confusion_matrix_LinearSVM_loso_binary.png
│       ├── confusion_matrix_LogReg_loso_binary.png
│       ├── confusion_matrix_RF_loso_binary.png
│       └── confusion_matrix_XGB_loso_binary.png
└── w60s5_binary/            # Window: 60s, Step: 5s
    ├── models/
    │   ├── ExtraTrees.onnx
    │   └── ExtraTrees_metadata.json
    └── figures/
        └── confusion_matrix_ExtraTrees_loso_binary.png

Input Features

The models expect 14 HRV features in this exact order:

- RMSSD

- Mean_RR

- HRV_SDNN

- pNN50

- HRV_HF

- HRV_LF

- HRV_HF_nu

- HRV_LF_nu

- HRV_LFHF

- HRV_TP

- HRV_SD1SD2

- HRV_Sampen

- HRV_DFA_alpha1

- HR
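Because the order matters, it can be safer to keep features in a name-keyed mapping and convert to a positional vector only at inference time. The following is a minimal sketch of that idea. The names `FEATURE_ORDER` and `to_feature_vector` are hypothetical helpers, and the values are the example inputs from the Quick Start.

```python
import math

# Feature order expected by the models, as listed above
FEATURE_ORDER = [
    "RMSSD", "Mean_RR", "HRV_SDNN", "pNN50", "HRV_HF", "HRV_LF",
    "HRV_HF_nu", "HRV_LF_nu", "HRV_LFHF", "HRV_TP", "HRV_SD1SD2",
    "HRV_Sampen", "HRV_DFA_alpha1", "HR",
]


def to_feature_vector(features):
    """Convert a {name: value} mapping into a list in model input order."""
    missing = [name for name in FEATURE_ORDER if name not in features]
    if missing:
        raise KeyError(f"missing HRV features: {missing}")
    vector = [float(features[name]) for name in FEATURE_ORDER]
    # Reject NaN/inf early instead of passing them to the model
    if any(math.isnan(v) or math.isinf(v) for v in vector):
        raise ValueError("HRV features must be finite numbers")
    return vector


# Keys may arrive in any order; the helper restores the expected one
example = {
    "HR": 91.0, "RMSSD": 35.5, "Mean_RR": 950.2, "HRV_SDNN": 55.1,
    "pNN50": 15.2, "HRV_HF": 430.0, "HRV_LF": 620.0, "HRV_HF_nu": 0.42,
    "HRV_LF_nu": 0.78, "HRV_LFHF": 1.45, "HRV_TP": 1450.0,
    "HRV_SD1SD2": 0.72, "HRV_Sampen": 1.3, "HRV_DFA_alpha1": 1.1,
}
vector = to_feature_vector(example)
print(len(vector))
```

The resulting list can be passed directly to `predict` in the Quick Start example.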

Output

- Labels: "Baseline" or "Stress"

- Confidence: Probability score for the predicted class

About Synheart

Synheart is an AI company specializing in on-device human state intelligence from physiological signals. Our emotion inference models are part of a comprehensive SDK ecosystem that includes:

- Multi-Platform SDKs: Dart/Flutter, Python, Kotlin, Swift

- On-Device Processing: All inference happens locally for privacy

- Real-Time Performance: Low-latency emotion detection from HR/RR signals

- Research-Based: Models trained on validated datasets (WESAD)

Using with Synheart Emotion SDKs

For production applications, we recommend using the official Synheart Emotion SDKs, which provide:

- ✅ Complete feature extraction pipeline (HR/RR → 14 HRV features)

- ✅ Thread-safe data ingestion

- ✅ Sliding window management

- ✅ Unified API across all platforms

- ✅ Integration with Synheart Core (HSI)

SDK Repositories:

- Python SDK - pip install synheart-emotion

- Dart/Flutter SDK

- Kotlin SDK

- Swift SDK

Direct ONNX Usage

This repository is ideal if you:

- Want to use the models directly with ONNX Runtime

- Have your own feature extraction pipeline

- Need to integrate into custom inference systems

- Are doing research or experimentation
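For those building their own feature extraction, the sketch below computes the five time-domain features (RMSSD, Mean_RR, HRV_SDNN, pNN50, HR) from a list of RR intervals using their standard definitions. It assumes RR intervals in milliseconds, pNN50 expressed as a percentage, and SDNN as the sample standard deviation. The exact units and conventions used when training these models are not stated here, so verify them against the SDK's pipeline before relying on this. The frequency-domain and nonlinear features are not covered, and `time_domain_hrv` is a hypothetical helper name.

```python
import math


def time_domain_hrv(rr_ms):
    """Compute time-domain HRV features from RR intervals in milliseconds."""
    n = len(rr_ms)
    if n < 3:
        raise ValueError("need at least 3 RR intervals")

    mean_rr = sum(rr_ms) / n
    # SDNN: sample standard deviation of the RR intervals
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))

    # Differences between successive RR intervals
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    # RMSSD: root mean square of the successive differences
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    # pNN50: percentage of successive differences larger than 50 ms
    pnn50 = 100.0 * sum(1 for d in diffs if abs(d) > 50) / len(diffs)

    # Mean heart rate in beats per minute
    hr = 60000.0 / mean_rr

    return {
        "RMSSD": rmssd,
        "Mean_RR": mean_rr,
        "HRV_SDNN": sdnn,
        "pNN50": pnn50,
        "HR": hr,
    }


# Hypothetical RR intervals (ms)
features = time_domain_hrv([800, 850, 790, 900, 810])
for name, value in features.items():
    print(f"{name}: {value:.2f}")
```

In practice a 60 s or 120 s window contains many more beats than this toy input, and artifact correction of the RR series usually precedes feature computation.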

Links

- Synheart AI: synheart.ai

- Synheart Emotion: Main Repository

- Documentation: Full SDK Documentation

License

These models are part of the Synheart Emotion ecosystem. Please refer to the main Synheart Emotion repository for licensing information.

Made with ❤️ by Synheart AI