PanelPainter-Project

PanelPainter-Project is an open-source initiative to automate black-and-white manga coloring using fine-tuned LoRAs.

This project is dedicated to training LoRAs to automate the coloring of black-and-white manga panels. I am releasing all the files here, including datasets, logs, and experimental versions, so others can see exactly how it was trained.

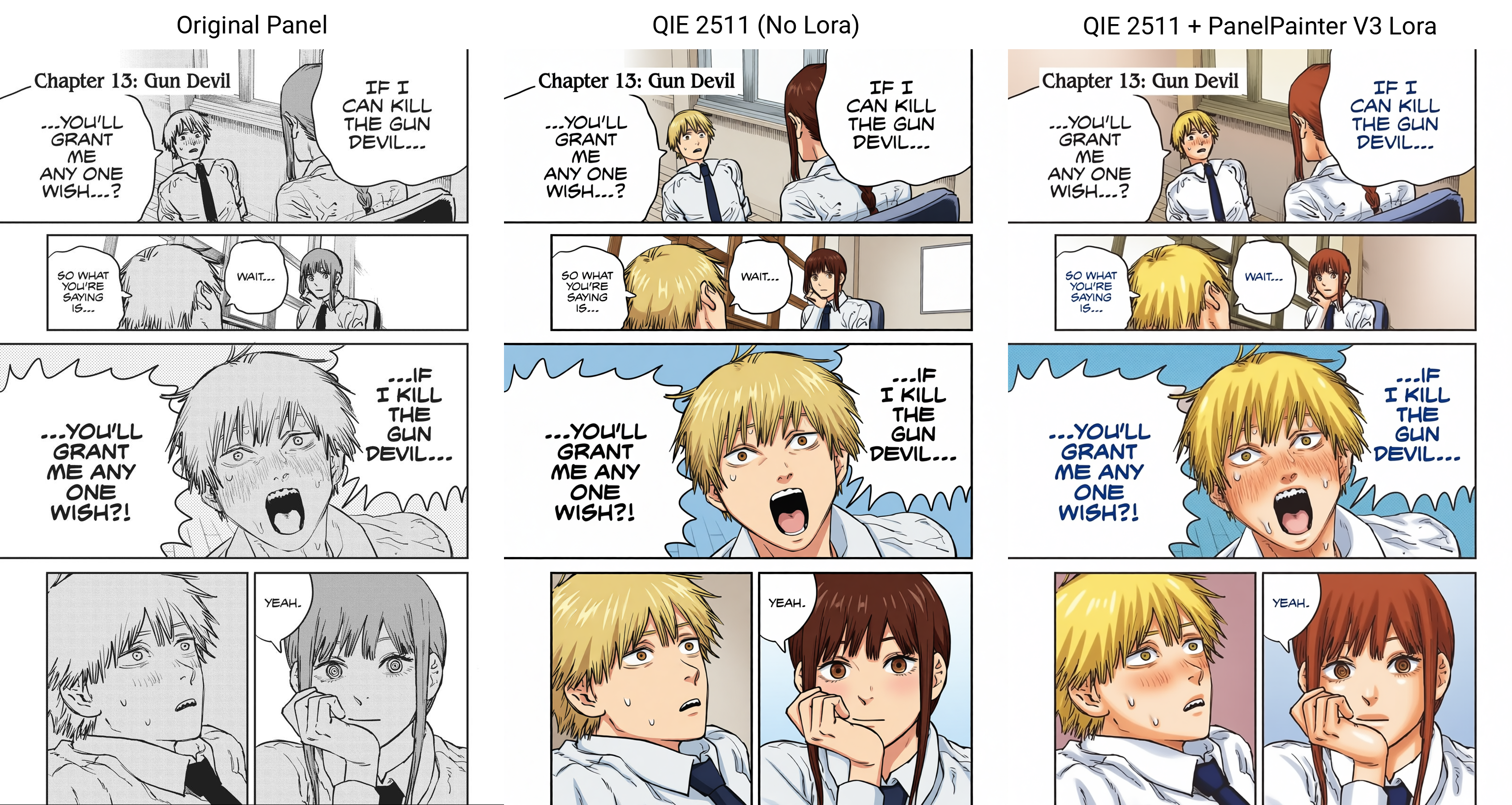

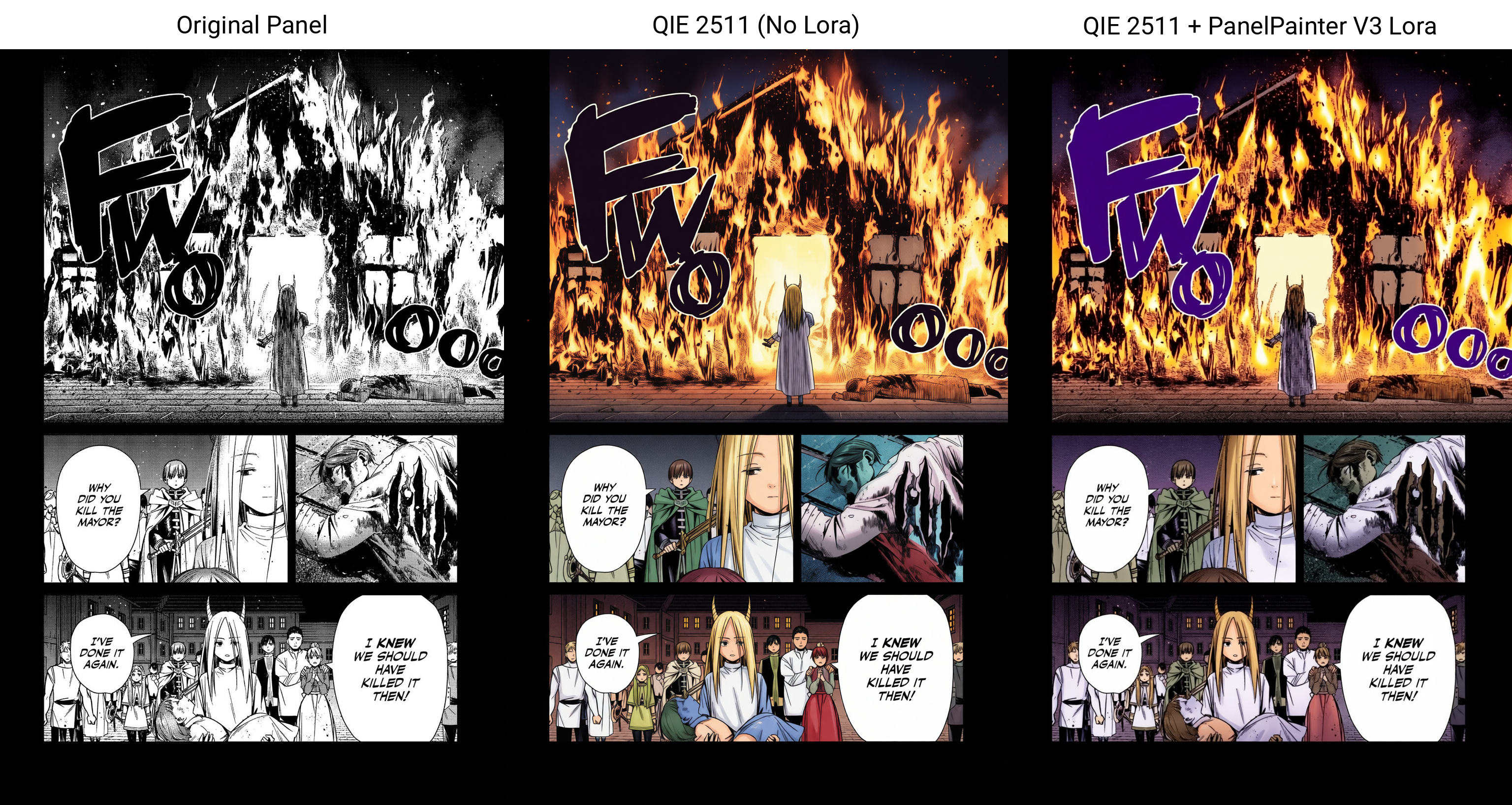

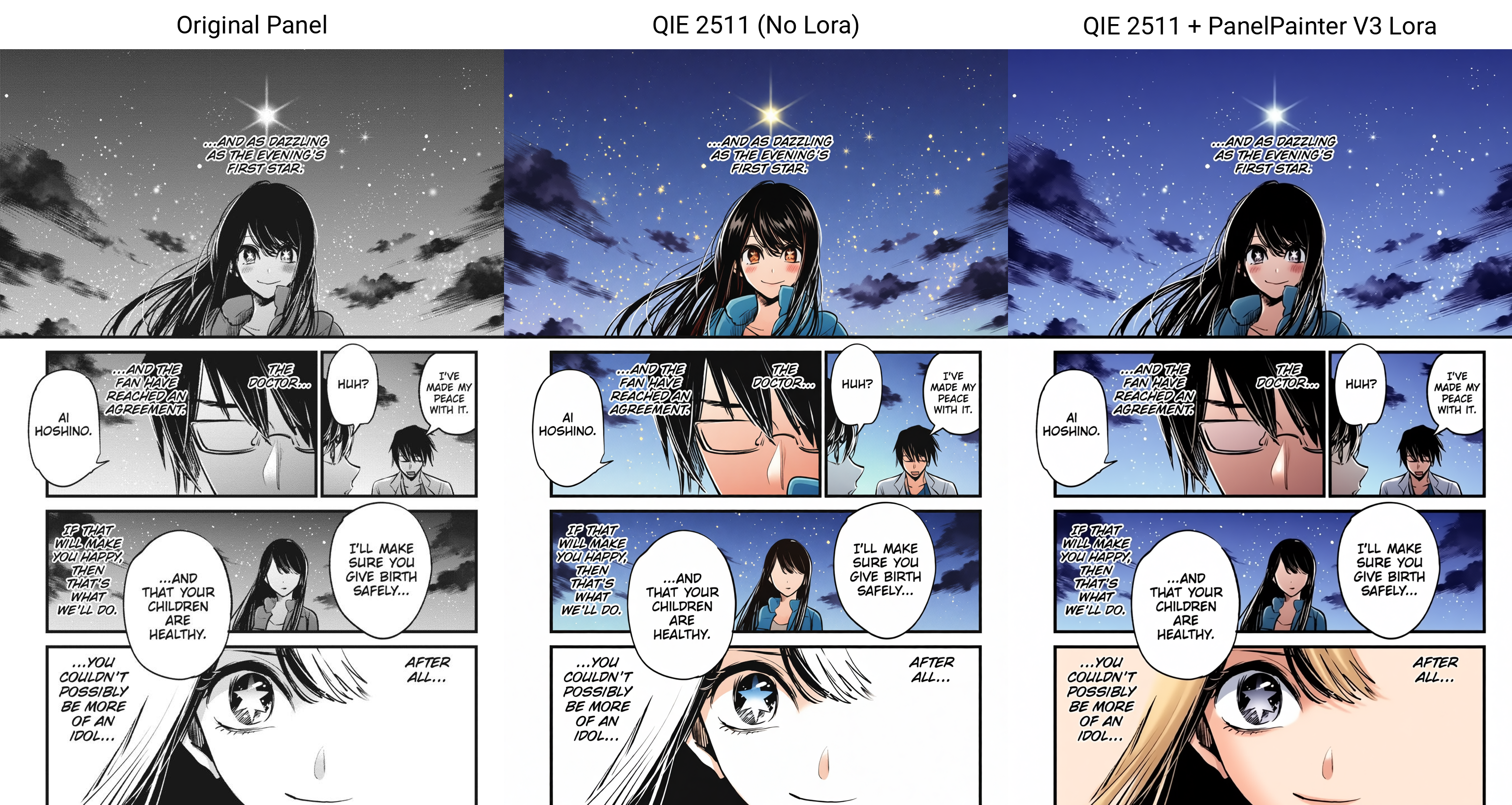

Showcase

Here are some examples comparing the original panel, the base Qwen Image Edit model, and the result with the PanelPainter V3 LoRA.

Showcase Generation Settings:

- LoRAs: PanelPainter V3 (Weight: 1.0) + 4-Step Lighting (Weight: 1.0)

- Steps: 4

- Sampler: Euler

- Scheduler: Simple

- Seed: 1000

- CFG: 1.0

Chainsaw Man

Frieren

Komi Can't Communicate

Oshi no Ko

Project Structure

This repository contains everything used to create the models:

1. LoRA Models (/loras)

This directory contains the model weights for all iterations of the project:

Trigger Word:

Color this panelpainter(Applicable for both V2 and V3)

- V3 (Latest Release):

PanelPainter_v3_Qwen2511.safetensors- Base: Qwen Image Edit 2511

- Note: The latest model trained on the expanded 903-image dataset.

- V2 (Stable):

PanelPainter_v2_Qwen2509.safetensors- Base: Qwen Image Edit 2509 (Compatible with 2511).

- Note: Standard release (High quality, low variety).

- V1 (Legacy):

PanelPainter_v1_Legacy.safetensors- Base: Qwen Image Edit 2509

- Note: Archived experimental version (synthetic data).

2. Training Logs (/logs)

Content: Tensorboard logs and charts from my training runs. You can check these to see how the loss converged and how the model learned over time for each version.

3. Workflows (/workflows)

Content: ComfyUI workflow JSON files to help you get started with PanelPainter.

4. Training Dataset

The datasets used for this project are hosted separately:

- PanelPainter-Dataset

Coming Soon: The V3 dataset was a good learning step for captioning, but it was randomly picked without any streamlined curation roughly 50% doujin and 50% mainstream colored manga. We're refining it further. Expect handpicked panels, better captions, and reduced doujin content. Release coming once quality standards are met.

Version History & Development Log

Version 3.0 (Current Release)

- Status: Released.

- Base Architecture: Qwen 2511.

- Strategy: Scaling Up High-Quality Data.

- Dataset: Expanded to 903 images. Recreated from scratch, comprising 50% doujin and 50% SFW panels.

- Summary: This version combines the correct "real line art" training method discovered in V2 with a significantly larger dataset. This improves the model's ability to generalize across different manga styles while maintaining the color quality of V2.

Version 2.0

- Status: Released / Stable.

- Base Model: Trained on Qwen Image Edit 2509, also it works on Qwen 2511 as well.

- The Breakthrough: After V1 failed, this version switched to training on real line art instead of synthetic grayscale.

- Dataset: A tiny, hyper-curated set of 150 images (70% Doujin / 30% SFW).

- Outcome: Despite the small size, it proved that high-quality real line art outperforms massive synthetic datasets. It produces good colors but lacks variety due to the small sample size.

Version 1.0

- Status: Archived / Deprecated.

- Base Model: Qwen Image Edit 2509.

- The Mistake: Trained on 7,000 images generated by simply desaturating colored pages (synthetic grayscale).

- Outcome: The model learned to color "perfect gray" inputs but failed on real, imperfect ink lines.

- Lesson: Quantity does not matter if the data distribution doesn't match real usage.

Training Configuration (V3)

Hardware: Trained on an A40 GPU on Runpod.

Below is the exact accelerate command used to train the V3 model on Musubi Tuner:

accelerate launch --num_cpu_threads_per_process 1 --mixed_precision bf16 \

/workspace/musubi-tuner/src/musubi_tuner/qwen_image_train_network.py \

--dataset_config dataset_edit.toml \

--dit /workspace/Training_Models_Qwen/Qwen_Image_Edit_2511_BF16.safetensors \

--vae /workspace/Training_Models_Qwen/qwen_train_vae.safetensors \

--text_encoder /workspace/Training_Models_Qwen/qwen_2.5_vl_7b_bf16.safetensors \

--model_version edit-2511 \

--network_module networks.lora_qwen_image \

--output_dir /workspace/output_panelpainter \

--output_name panelpainter_v3_part1 \

--mixed_precision bf16 \

--max_data_loader_n_workers 0 \

--learning_rate 3e-4 \

--network_dim 128 \

--network_alpha 128 \

--optimizer_type adafactor \

--optimizer_args "scale_parameter=False" "relative_step=False" "warmup_init=False" "weight_decay=0.01" \

--lr_scheduler cosine \

--lr_warmup_steps 150 \

--timestep_sampling qinglong_qwen \

--discrete_flow_shift 2.2 \

--max_train_epochs 8 \

--save_every_n_epochs 1 \

--save_state \

--gradient_checkpointing \

--gradient_checkpointing_cpu_offload \

--gradient_accumulation_steps 4 \

--blocks_to_swap 20 \

--sdpa

Dataset Settings: Use a resolution of 1328x1328 with bucketing enabled to handle varying aspect ratios (no upscaling). The training ran with a batch size of 1 and enabled qwen_image_edit_no_resize_control to preserve the original dimensions of the control images during processing.

License

- Project: Apache 2.0

- Dataset: Hosted separately, contains copyrighted manga panels.

- Copyright: Original art belongs to the respective creators and publishers.

Acknowledgements

Trained on Musubi Tuner. Thanks to kohya-ss.

Dataset Contributors: Thanks to @Rox_Jr & @lucifer_brine04 for their help with the dataset.

External Links

- Public Model Page: Civitai: PanelPainter

- Downloads last month

- -